Training

1. Define generalisation.

2. Define hyperparameters.

3. State the difference between parameters and hyperparameters.

4. Define the holdout method (Train-Test Split).

5. Explain how holdout method (Train-Test Split) works.

- Split the data into Training Data (70-80%) and the remainder to Test Data (20-30%)

- The training set is used to train the machine learning model.

- The testing set is used to evaluate the model’s performance using the appropriate metric.

6. Define data leakage and the implications.

7. Data leakage can occur when future information is included in the training data. Elaborate on this concept and provide an example.

Training data inadvertently includes information from the future that the model would not have access to in a real-world scenario.

For example, if you’re predicting stock prices, using features that include future stock prices or news articles published after the date you want to predict would be considered data leakage.

8. Data leakage can occur because of data preprocessing errors. Elaborate on this concept and provide an example.

Errors in data preprocessing, such as normalising or scaling features, can introduce data leakage.

For example, if you scale the entire dataset, including the testing set, based on statistics calculated from both sets, it can lead to information leakage from the test set into the training set.

9. Data leakage can occur due to improper cross-validation implementation. Elaborate on this concept and provide an example.

When performing cross-validation, it’s essential to ensure that the validation set in each fold does not leak information from the training set.

For instance, if you preprocess your data (e.g., impute missing values) separately for each fold without considering the training set alone, you could introduce data leakage because the information from the validation set will influence the training set within that fold.

10. State the main issue with using only train-test split without a separate validation set.

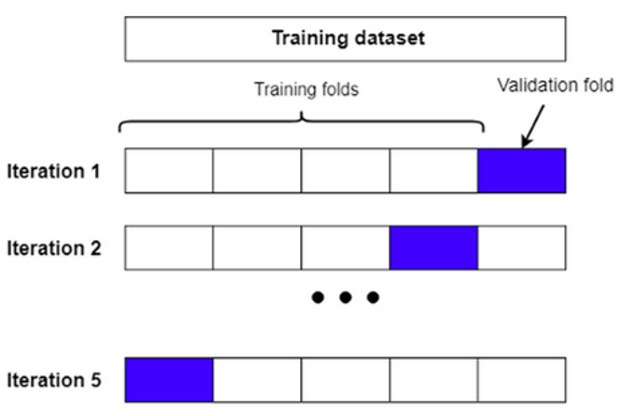

11. Explain how K-fold cross validation works.

Image Source: https://towardsdatascience.com/why-do-we-need-a-validation-set-in-addition-to-training-and-test-sets-5cf4a65550e0

- Split the training dataset into K folds, which is also the number of iterations.

- For each iteration

- Perform holdout method, take one group for test set, the rest will be training set.

- Fit a sub model on the training set and evaluate on the test set

- Retrain the score

- Average score of all sub models to get the cross-validated score of the model.

12. List the K-fold cross validation advantages.

- It reduces the impact of randomness in the data split because the model is evaluated on multiple different test sets.

- It provides a more accurate and stable estimate of how well the model generalizes to new data.

- It helps identify if the model’s performance varies significantly across different subsets of the data.

- It especially useful when working with limited data.